Web scraping is a vital technique for extracting data from websites, giving businesses, researchers, and developers insight into online material. Scraping complex websites is difficult, however, when their content depends on JavaScript to render. Headless browsers are an effective solution to this problem because they can run that JavaScript while scraping.

Understanding Headless Browsers

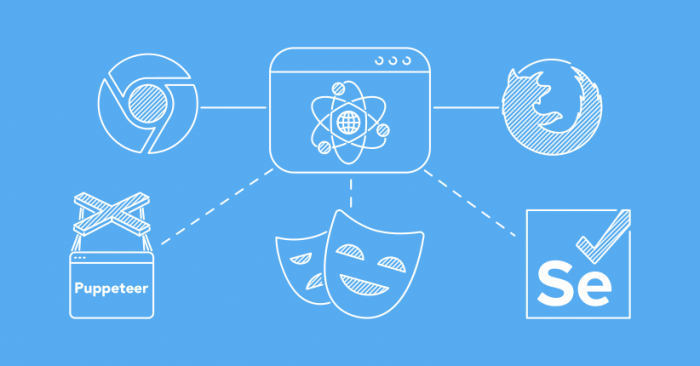

A headless browser works like a traditional browser but has no graphical user interface. It loads web pages, renders them, and responds to interactive commands without displaying anything on screen. Widely used options include Chrome in headless mode, headless Firefox, and the now-discontinued PhantomJS. These tools let web scraping scripts retrieve data from dynamic pages that rely heavily on JavaScript.

The main benefit of headless browsers appears on sites that deploy anti-scraping techniques. Because they execute JavaScript and render pages the same way conventional browsers do, they are less likely to be flagged by basic anti-scraping systems. The same capability lets a web scraping service retrieve data that is loaded by scripts or fetched asynchronously.

Using Headless Browsers for Web Scraping

Scraping with a headless browser usually means pairing it with an automation framework such as Selenium or Puppeteer. Selenium is a popular automation platform that can drive several browsers in headless mode, while Puppeteer is a Node.js library focused on controlling Chrome. With these tools, developers can automate headless browsers to extract data from complex pages and to simulate user interactions.
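
As a minimal sketch, assuming Python with Selenium 4 and a local Chrome installation, the following launches Chrome in headless mode and returns the page's DOM after its scripts have run. The helper names `chrome_headless_args` and `fetch_rendered_html` are illustrative, not part of Selenium. The `--headless=new` flag selects Chrome's newer headless mode (Chrome 109 and later), and Selenium is imported inside the function so the flag-building helper can be used on its own.

```python
def chrome_headless_args(user_agent=None, width=1920, height=1080):
    """Build Chrome command-line flags for a headless scraping session."""
    args = ["--headless=new", f"--window-size={width},{height}"]
    if user_agent:
        # Optional: override the browser's default user-agent string.
        args.append(f"--user-agent={user_agent}")
    return args


def fetch_rendered_html(url, args=None):
    """Load `url` in headless Chrome and return the DOM after scripts ran."""
    # Imported lazily so chrome_headless_args() works without Selenium.
    from selenium import webdriver

    options = webdriver.ChromeOptions()
    for arg in args or chrome_headless_args():
        options.add_argument(arg)
    driver = webdriver.Chrome(options=options)
    try:
        driver.get(url)
        return driver.page_source
    finally:
        # Always shut the browser down, even if loading the page failed.
        driver.quit()
```

`page_source` reflects the DOM once the initial page load finishes; content that arrives later through AJAX may still be missing at that point, so scrapers typically add an explicit wait before reading it.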

Headless browsers also let developers handle websites that load content dynamically through AJAX. A standard scraper that only downloads the initial HTML may miss data that arrives later, whereas a headless browser can wait for that content to load and then capture every element. This ability to mimic real browser behavior is essential when extracting data from modern interactive websites.
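
Waiting for dynamic content amounts to polling: check repeatedly whether the content is present and give up after a deadline. A minimal, library-agnostic sketch in Python (the name `wait_until` is hypothetical):

```python
import time


def wait_until(condition, timeout=10.0, poll_interval=0.25):
    """Call `condition` until it returns a truthy value, then return it.

    Raises TimeoutError if nothing truthy appears within `timeout` seconds.
    """
    deadline = time.monotonic() + timeout
    while True:
        result = condition()
        if result:
            return result
        if time.monotonic() >= deadline:
            raise TimeoutError(f"condition not met within {timeout} s")
        time.sleep(poll_interval)
```

With a Selenium `driver`, the condition could be `lambda: driver.find_elements(By.CSS_SELECTOR, "div.result")`, where `div.result` is a placeholder selector: `find_elements` returns an empty (falsy) list until matching elements exist. Selenium also ships this pattern as `WebDriverWait(driver, 10).until(EC.presence_of_element_located((By.CSS_SELECTOR, "div.result")))`, with `EC` imported from `selenium.webdriver.support.expected_conditions`; it raises Selenium's `TimeoutException` rather than `TimeoutError`.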

Advantages and Challenges of Headless Browsers

Efficiency is the primary advantage of headless browsers. Because they do not draw a graphical user interface, they use fewer resources and run faster than full browsers, which matters in large-scale web scraping. They also run well on servers that have no display, which makes better use of the available resources.

Headless browsers also bring practical challenges. Some websites defend against scraping with sophisticated systems that check browser fingerprints and detect automated behavior. Developers need to configure headless browsers carefully, because an improper setup can reveal automation to the site. Adding delays between actions, introducing random mouse movements, and rotating user-agent strings can reduce the risk of detection.
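
Two of these mitigations, randomized delays and user-agent rotation, can be sketched with the Python standard library alone. The user-agent strings below are hypothetical placeholders, and the class and function names are illustrative; random mouse movements would need browser-level tooling (for example Selenium's `ActionChains`) and are not shown.

```python
import itertools
import random
import time

# Hypothetical placeholder strings; substitute real, current user agents.
USER_AGENTS = [
    "Mozilla/5.0 (Windows NT 10.0; Win64; x64) ExampleBrowser/1.0",
    "Mozilla/5.0 (Macintosh; Intel Mac OS X 13_0) ExampleBrowser/1.0",
    "Mozilla/5.0 (X11; Linux x86_64) ExampleBrowser/1.0",
]


class UserAgentRotator:
    """Hand out user-agent strings in round-robin order, one per session."""

    def __init__(self, agents):
        if not agents:
            raise ValueError("at least one user-agent string is required")
        self._cycle = itertools.cycle(agents)

    def next_agent(self):
        return next(self._cycle)


def jittered_delay(min_s=1.0, max_s=3.0, rng=random):
    """Pick a random pause length in seconds so actions are unevenly spaced."""
    return rng.uniform(min_s, max_s)


def polite_pause(min_s=1.0, max_s=3.0, rng=random):
    """Sleep for a jittered interval and return how long it slept."""
    delay = jittered_delay(min_s, max_s, rng)
    time.sleep(delay)
    return delay
```

A new session would pass `rotator.next_agent()` to Chrome through its `--user-agent=` flag and call `polite_pause()` between page actions. Changing the user agent alone does not alter other fingerprint signals, so it lowers detection risk rather than removing it.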

Best Practices for Effective Web Scraping with Headless Browsers

Getting the best results from headless browsers requires following established practices consistently. Before starting any scraping operation, make sure you comply with the website's terms and with applicable legal requirements. Proxies distribute requests across multiple IP addresses, which reduces the risk of IP blocking, and spacing requests out keeps them from overloading the target server.
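
A site's robots.txt file is one machine-readable signal of what it permits (it does not replace reading its terms), and Python's standard `urllib.robotparser` can check it. The sketch below pairs that check with a round-robin proxy pool that emits Chrome's `--proxy-server` flag. The robots.txt content, proxy addresses, `MyScraper` agent name, and the helper names are all hypothetical.

```python
import itertools
from urllib import robotparser


def robots_rules(robots_txt):
    """Parse the text of a robots.txt file into a queryable rule set."""
    rules = robotparser.RobotFileParser()
    rules.parse(robots_txt.splitlines())
    return rules


class ProxyPool:
    """Rotate through proxy endpoints so requests leave from different IPs."""

    def __init__(self, proxies):
        if not proxies:
            raise ValueError("at least one proxy is required")
        self._cycle = itertools.cycle(proxies)

    def next_chrome_flag(self):
        # Chrome's --proxy-server flag takes a scheme://host:port value.
        return f"--proxy-server={next(self._cycle)}"


# Hypothetical example data; real values come from the site and your provider.
ROBOTS_TXT = """\
User-agent: *
Disallow: /private/
"""
PROXIES = ["http://proxy-a.example:8080", "http://proxy-b.example:8080"]

rules = robots_rules(ROBOTS_TXT)
pool = ProxyPool(PROXIES)
for url in ["https://example.com/products", "https://example.com/private/admin"]:
    if rules.can_fetch("MyScraper", url):
        print(f"fetch {url} via {pool.next_chrome_flag()}")
    else:
        print(f"skip {url} (disallowed by robots.txt)")
```

Only allowed URLs consume a proxy from the pool, so disallowed paths are skipped before any browser is launched.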

Scraping dynamically loaded content with Selenium or Puppeteer requires carefully written scripts. Extraction is more reliable when scripts explicitly wait for specific elements to appear, which prevents incomplete or inaccurate results. You should also build in automatic error handling for problems such as page timeouts or unexpected changes to a site's layout.
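
For transient failures such as page timeouts, a common approach is to retry with exponential backoff. A minimal sketch in Python; the name `with_retries` is hypothetical, and `sleep` is a parameter only so the delays can be observed in tests:

```python
import time


def with_retries(action, attempts=3, base_delay=1.0,
                 retry_on=(TimeoutError,), sleep=time.sleep):
    """Run `action`, retrying on the listed exceptions with exponential backoff.

    Waits base_delay, 2 * base_delay, 4 * base_delay, ... between attempts
    and re-raises the last error once all `attempts` are used up.
    """
    for attempt in range(1, attempts + 1):
        try:
            return action()
        except retry_on:
            if attempt == attempts:
                raise
            sleep(base_delay * 2 ** (attempt - 1))
```

With Selenium, pass `retry_on=(TimeoutException,)` using `selenium.common.exceptions.TimeoutException`, because that class does not derive from Python's built-in `TimeoutError`. Errors outside `retry_on`, such as a missing element after a site redesign, propagate immediately, which suits layout changes that retrying will not fix.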

Headless browsers offer an effective way to obtain data from dynamic, JavaScript-heavy websites. Combined with automation frameworks such as Selenium or Puppeteer, they can navigate complex pages, execute scripts, and extract valuable information efficiently. Following best practices helps companies succeed with web scraping projects, even though resource management and detection avoidance remain ongoing challenges. Organizations that need large-scale data acquisition should consider professional web scraping services to achieve high accuracy and meet compliance standards.